
black-forest-labs/FLUX-2-dev

Pricing: $0.01 x (width / 1024) x (height / 1024) x (iters / 28), where iters is the number of inference steps
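The pricing formula scales linearly with width, height, and step count, so the default 1024 x 1024, 28-step setting costs exactly the base $0.01. The sketch below simply evaluates that formula. It assumes the formula is a per-image price and reuses the parameter ranges from the input form; the function name `flux2_dev_price` is hypothetical and not part of any Deep Infra SDK.

```python
def flux2_dev_price(width: int = 1024, height: int = 1024, iters: int = 28) -> float:
    """Evaluate $0.01 x (width / 1024) x (height / 1024) x (iters / 28).

    Hypothetical helper; treating the result as a per-image price is an assumption.
    """
    # Ranges taken from the input form (128-1920 px, 1-100 steps).
    if not (128 <= width <= 1920 and 128 <= height <= 1920):
        raise ValueError("width and height must be between 128 and 1920 px")
    if not 1 <= iters <= 100:
        raise ValueError("iters must be between 1 and 100")
    return 0.01 * (width / 1024) * (height / 1024) * (iters / 28)


if __name__ == "__main__":
    # Default settings give the base price.
    print(f"{flux2_dev_price():.4f}")
    # Halving both width and height cuts the price to a quarter.
    print(f"{flux2_dev_price(512, 512):.4f}")
```

Because every factor is a ratio to the default, doubling the step count from 28 to 56 doubles the price, while halving each side of the image quarters it.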

The new FLUX.2 [dev] introduces a faster, more modular architecture for next-generation image generation pipelines. It delivers improved performance, cleaner control APIs, and a more flexible development workflow for custom inference setups.

Input

Prompt

text prompt


Num Inference Steps

number of denoising steps (Default: 28, 1 ≤ num_inference_steps ≤ 100)

Width

image width in px (Default: 1024, 128 ≤ width ≤ 1920)

Height

image height in px (Default: 1024, 128 ≤ height ≤ 1920)

Seed

random seed; empty means random (Default: empty, 0 ≤ seed < 18446744073709551616, i.e. 2^64)

Guidance Scale

classifier-free guidance scale; higher values make the output follow the prompt more closely (Default: 2.5, 0 ≤ guidance_scale ≤ 20)

Match Image Size

If set, the generated image will match the size of the input image at this index (0-based). (Default: empty, 0 ≤ match_image_size < 4)

Input images: up to four image files can be uploaded for editing (0-based indices 0 to 3, as referenced by Match Image Size).
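Before submitting a request, it can help to check the parameters against the ranges listed above. The following is a minimal sketch under stated assumptions: the payload key `prompt` is assumed from the form label, the other keys reuse the snake_case names in the parameter descriptions, and the seed bound is taken to be 2^64. It only builds a dictionary; the function name `build_flux2_inputs` is hypothetical, and the endpoint and authentication needed to send it are not covered here.

```python
from typing import Any, Dict, Optional

# Assumption: the form's seed bound corresponds to unsigned 64-bit seeds.
SEED_LIMIT = 2**64


def build_flux2_inputs(
    prompt: str,
    num_inference_steps: int = 28,
    width: int = 1024,
    height: int = 1024,
    seed: Optional[int] = None,
    guidance_scale: float = 2.5,
    match_image_size: Optional[int] = None,
) -> Dict[str, Any]:
    """Validate inputs against the documented ranges and return a payload dict.

    Hypothetical helper: the "prompt" key is assumed; the other keys reuse
    the parameter names shown in the form descriptions.
    """
    if not prompt:
        raise ValueError("prompt must be a non-empty string")
    if not 1 <= num_inference_steps <= 100:
        raise ValueError("num_inference_steps must be in [1, 100]")
    for name, value in (("width", width), ("height", height)):
        if not 128 <= value <= 1920:
            raise ValueError(f"{name} must be in [128, 1920]")
    if not 0 <= guidance_scale <= 20:
        raise ValueError("guidance_scale must be in [0, 20]")
    if seed is not None and not 0 <= seed < SEED_LIMIT:
        raise ValueError("seed must be in [0, 2**64)")
    if match_image_size is not None and not 0 <= match_image_size < 4:
        raise ValueError("match_image_size must be an image index in [0, 3]")

    payload: Dict[str, Any] = {
        "prompt": prompt,
        "num_inference_steps": num_inference_steps,
        "width": width,
        "height": height,
        "guidance_scale": guidance_scale,
    }
    # Optional fields are left out when empty ("empty means random" for the seed).
    if seed is not None:
        payload["seed"] = seed
    if match_image_size is not None:
        payload["match_image_size"] = match_image_size
    return payload


if __name__ == "__main__":
    print(build_flux2_inputs("a red fox in fresh snow", seed=42))
```

Omitting unset optional fields, rather than sending `None`, keeps the "empty" defaults from the form intact.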

FLUX.2

by Black Forest Labs: https://bfl.ai.

Documentation for our API can be found here: docs.bfl.ai.

This repo contains minimal inference code to run image generation & editing with our FLUX.2 open-weight models.

FLUX.2 [dev]

FLUX.2 [dev] is a 32B-parameter flow-matching transformer model capable of generating images and of editing one or more input images. The model is released under the FLUX.2-dev Non-Commercial License and can be found here.
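In flow matching, the network predicts a velocity field, and an image is produced by integrating that field from noise to data over a fixed number of steps (the Num Inference Steps parameter above). The toy below is a generic Euler sampler for this idea, not FLUX.2's actual scheduler: real pipelines typically use non-uniform timestep schedules, may run t in the opposite direction, and replace the hand-written `toy_velocity` (a hypothetical stand-in) with the transformer's prediction. Here t = 0 is noise and t = 1 is data.

```python
from typing import Callable, List

Vector = List[float]
VelocityFn = Callable[[Vector, float], Vector]


def euler_sample(velocity: VelocityFn, x0: Vector, num_steps: int) -> Vector:
    """Integrate dx/dt = velocity(x, t) from t=0 (noise) to t=1 (data) with Euler steps."""
    if num_steps < 1:
        raise ValueError("num_steps must be at least 1")
    dt = 1.0 / num_steps
    x = list(x0)
    for i in range(num_steps):
        t = i * dt  # always < 1, so toy_velocity never divides by zero
        v = velocity(x, t)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


def toy_velocity(target: Vector) -> VelocityFn:
    """Hypothetical oracle velocity for one target point on a straight path.

    On the path x_t = (1 - t) * x0 + t * target, the velocity
    (target - x) / (1 - t) equals the constant target - x0.
    """
    def v(x: Vector, t: float) -> Vector:
        return [(ti - xi) / (1.0 - t) for ti, xi in zip(target, x)]
    return v


if __name__ == "__main__":
    noise = [0.3, 0.5]
    print(euler_sample(toy_velocity([1.0, -2.0]), noise, num_steps=28))
```

Because the toy path is perfectly straight, Euler integration lands on the target for any step count, even a single step. A trained model's paths are only approximately straight, which is why the step count trades quality against cost.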

Note that the reference inference script for FLUX.2 [dev] needs a considerable amount of VRAM (an H100-equivalent GPU). We partnered with Hugging Face to make quantized versions that run on consumer hardware; instructions are available for running the model on an RTX 4090 with a remote text encoder. For other quantization sizes and combinations, check the diffusers quantization guide here.
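A back-of-the-envelope calculation shows why quantization and a remote text encoder matter. The sketch counts only the memory needed to hold weights (parameters x bits / 8), in decimal gigabytes. It ignores activations, the autoencoder, and quantization overhead such as scale factors, and the 24B figure for the text encoder is approximated from the model name Mistral-Small-3.2-24B-Instruct-2506, so treat the results as lower bounds.

```python
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Memory to hold the weights alone, in decimal gigabytes (1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    transformer_params = 32e9  # FLUX.2 [dev] transformer, per the model card
    text_encoder_params = 24e9  # approximate, taken from the Mistral model name
    for label, bits in (("bf16", 16), ("8-bit", 8), ("4-bit", 4)):
        gb = weight_memory_gb(transformer_params, bits)
        print(f"transformer {label}: {gb:.0f} GB")
    print(f"text encoder bf16: {weight_memory_gb(text_encoder_params, 16):.0f} GB")
```

At bf16 the transformer weights alone take about 64 GB, which fits an 80 GB H100 but not a 24 GB RTX 4090. At 4 bits they drop to about 16 GB, and offloading the roughly 48 GB bf16 text encoder to a remote service is what leaves room on a consumer card.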

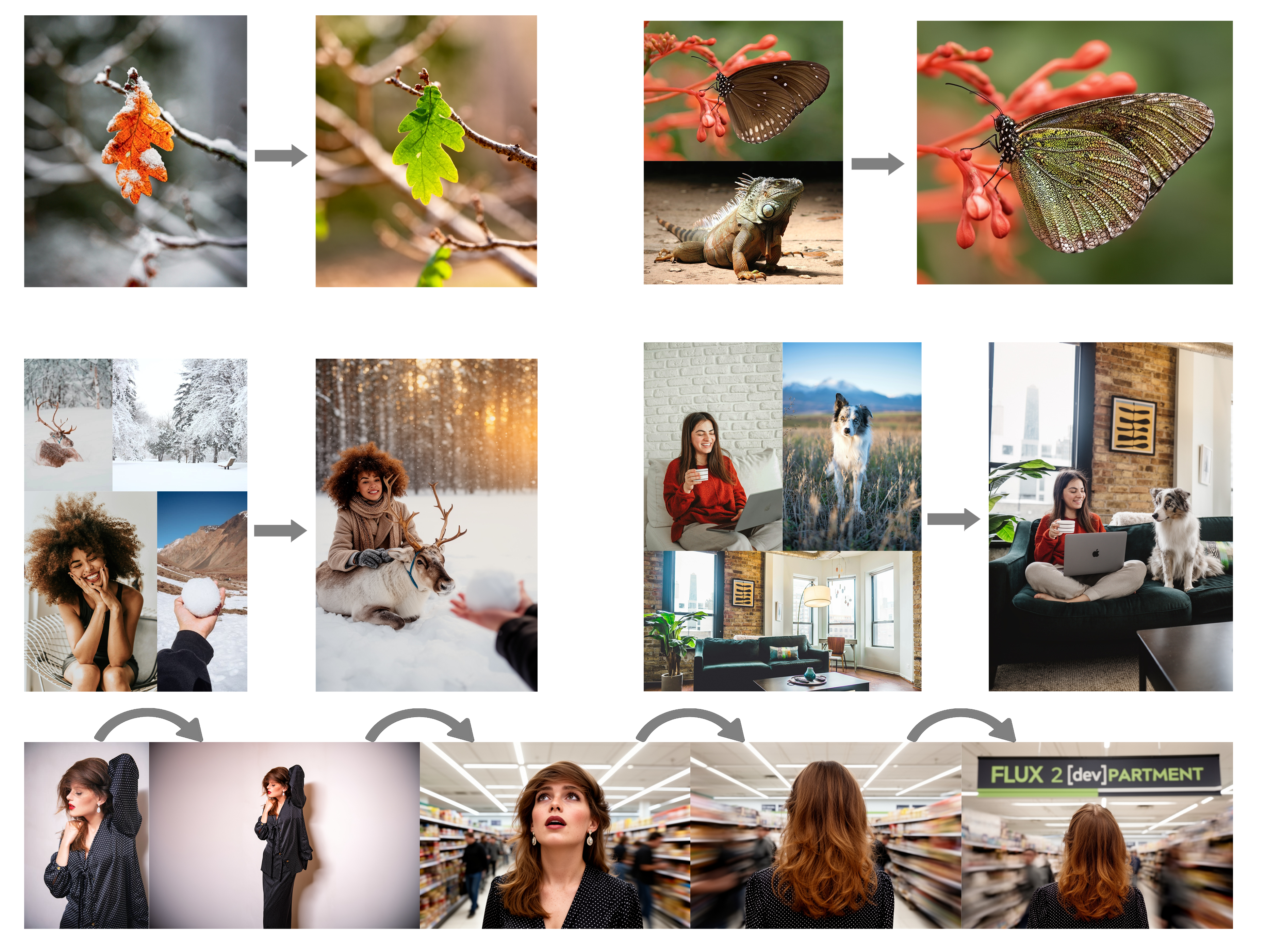

Text-to-image examples

Editing examples

Prompt upsampling

FLUX.2 [dev] benefits significantly from prompt upsampling. The inference script supports two options: local prompt upsampling with the same model we use for text encoding (Mistral-Small-3.2-24B-Instruct-2506), or any model on OpenRouter via an API call.
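For the OpenRouter option, upsampling amounts to one chat-completions call that rewrites a short prompt into a more detailed one. The sketch below builds (but does not send) such a request with the standard library, using OpenRouter's OpenAI-compatible endpoint. The instruction text, the helper names, and the model id passed in are hypothetical; this is not the system prompt or code used by the FLUX.2 inference script.

```python
import json
import urllib.request
from typing import Any, Dict

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"

# Hypothetical instruction, not the one used by the FLUX.2 inference script.
UPSAMPLE_INSTRUCTION = (
    "Rewrite the user's image prompt into a more detailed, visually specific "
    "prompt. Keep the original intent. Return only the rewritten prompt."
)


def build_upsample_request(prompt: str, model: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completions request."""
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": UPSAMPLE_INSTRUCTION},
            {"role": "user", "content": prompt},
        ],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_upsampled_prompt(response: Dict[str, Any]) -> str:
    """Pull the rewritten prompt from an OpenAI-compatible response body."""
    return response["choices"][0]["message"]["content"].strip()
```

To actually call the API, pass the request to `urllib.request.urlopen`, decode the JSON body, and feed it to `extract_upsampled_prompt`; the resulting text then replaces the original prompt.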

See the upsampling guide for additional details and guidance on when to use upsampling.

FLUX.2 autoencoder

The FLUX.2 autoencoder is considerably improved over the FLUX.1 autoencoder. It is released under Apache 2.0 and can be found here. For more information, see our technical blog post.

Citation

If you find the provided code or models useful for your research, consider citing them as:

@misc{flux-2-2025,
    author={Black Forest Labs},
    title={{FLUX.2: Frontier Visual Intelligence}},
    year={2025},
    howpublished={\url{https://bfl.ai/blog/flux-2}},
}

© 2026 Deep Infra. All rights reserved.