

Did you just fine-tune your favorite model and wonder where to run it? We have you covered, with a simple API and predictable pricing.

Put your model on Hugging Face

You can use a private repo if you wish. For better security, create a Hugging Face access token scoped to just that repo.

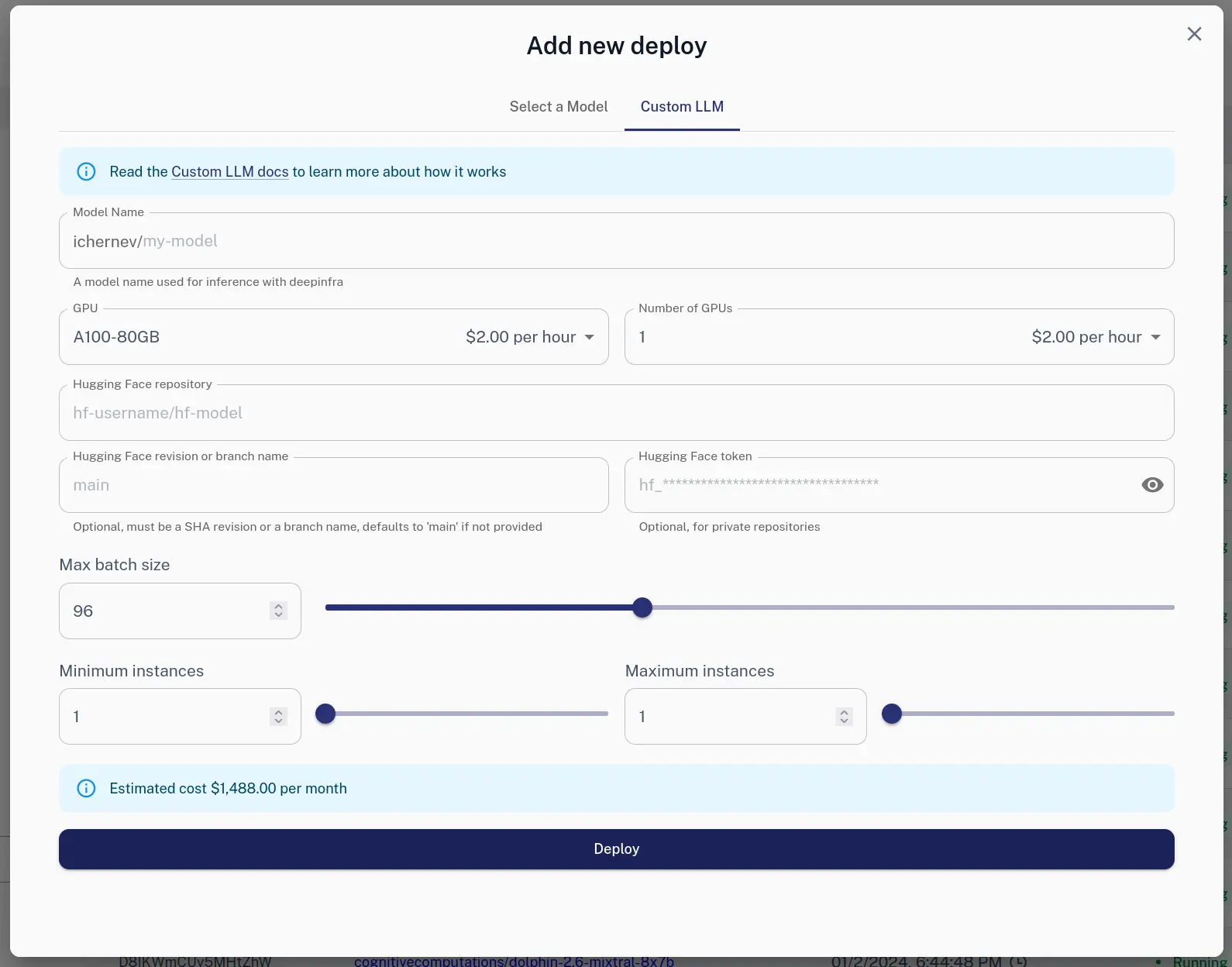

Create a custom deployment

Via Web

You can use the Web UI to create a new deployment.

Via HTTP

We also offer an HTTP API:

curl -X POST https://api.deepinfra.com/deploy/llm -d '{
  "model_name": "test-model",
  "gpu": "A100-80GB",
  "num_gpus": 2,
  "max_batch_size": 64,
  "hf": {
    "repo": "meta-llama/Llama-2-7b-chat-hf"
  },
  "settings": {
    "min_instances": 1,
    "max_instances": 1
  }
}' -H 'Content-Type: application/json' \
  -H "Authorization: Bearer YOUR_API_KEY"
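If you would rather script the deployment than paste a curl command, the same request can be sketched in Python with only the standard library. This is a minimal sketch, not an official client: the endpoint, headers, and payload fields are copied from the curl example above, while `build_deploy_request` and `send` are hypothetical helper names, and the shape of the response is not documented here, so it is returned as raw text.

```python
import json
import urllib.request

# Endpoint taken from the curl example above.
DEPLOY_URL = "https://api.deepinfra.com/deploy/llm"


def build_deploy_request(api_key: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) the POST request for a custom deployment."""
    # json.dumps always emits valid JSON, which avoids hand-editing
    # mistakes such as trailing commas.
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        DEPLOY_URL,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
    )


def send(request: urllib.request.Request) -> str:
    """Hypothetical helper: send the request and return the raw response body."""
    with urllib.request.urlopen(request) as response:
        return response.read().decode("utf-8")


# Same fields and values as the curl example.
deploy_payload = {
    "model_name": "test-model",
    "gpu": "A100-80GB",
    "num_gpus": 2,
    "max_batch_size": 64,
    "hf": {"repo": "meta-llama/Llama-2-7b-chat-hf"},
    "settings": {"min_instances": 1, "max_instances": 1},
}

# Replace YOUR_API_KEY with a real key before sending.
deploy_request = build_deploy_request("YOUR_API_KEY", deploy_payload)
# To actually create the deployment: print(send(deploy_request))
```

Building the request separately from sending it lets you inspect the URL, headers, and body before anything is deployed.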

Use it

curl -X POST \
  -d '{"input": "Hello"}' \
  -H 'Content-Type: application/json' \
  -H "Authorization: Bearer YOUR_API_KEY" \
  'https://api.deepinfra.com/v1/inference/github-username/di-model-name'
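The inference call can be sketched the same way in Python. This assumes only what the curl command shows: a POST to `/v1/inference/` followed by the model path, with a JSON body containing `input`. The helper name `build_inference_request` is hypothetical, and `github-username/di-model-name` is the placeholder from the example, to be replaced with your own deployment's path.

```python
import json
import urllib.request

# Base URL taken from the curl example above.
INFERENCE_BASE_URL = "https://api.deepinfra.com/v1/inference"


def build_inference_request(
    api_key: str, model_path: str, prompt: str
) -> urllib.request.Request:
    """Build (but do not send) an inference request for a deployed model."""
    body = json.dumps({"input": prompt}).encode("utf-8")
    return urllib.request.Request(
        f"{INFERENCE_BASE_URL}/{model_path}",
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
    )


# Placeholder values copied from the curl example.
inference_request = build_inference_request(
    "YOUR_API_KEY", "github-username/di-model-name", "Hello"
)

# To run the model, send the request and read the raw response body:
# with urllib.request.urlopen(inference_request) as response:
#     print(response.read().decode("utf-8"))
```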

For an in-depth tutorial, check the Custom LLM Docs.


© 2026 Deep Infra. All rights reserved.